Background

The property & casualty insurance industry continues to face challenging market conditions. Premium rates keep dropping while the economic slump shrinks exposure bases. In the face of this premium shrinkage, carriers are trying to hold the line on expenses even as they strive for higher submission and policy counts to keep premium revenue up. Agents, facing their own revenue pressures, are shopping more risks around and demanding greater ease of doing business from their carriers. At the same time, underwriters are under constant pressure to improve underwriting quality and discipline. Through it all, internal processes are cumbersome, key systems are inflexible, and any change requires a major commitment of people, time, and money with uncertain results.

Challenging times indeed! I’m not fond of “perfect storm” analogies, but if you feel like George Clooney trying to get his fishing boat up over that wave, or Mark Wahlberg at the end, stretched out in his survival suit one hundred miles from land, we need to talk!

It is time to modernize and optimize your underwriting processes, even in challenging times. Emerging technologies and methods can do a great deal, but before selecting the technology, we have to figure out what our new process should be. So let us consider what carriers really want their underwriting process to be, and then look at which technologies can get us there.

The carriers speak out

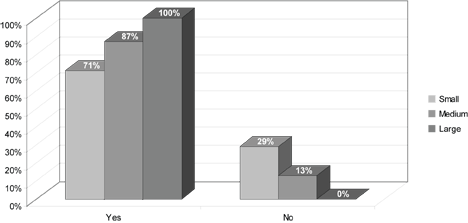

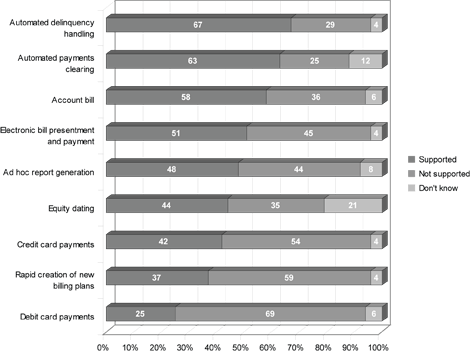

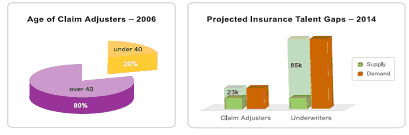

We conducted three separate research studies in which we surveyed commercial carrier CEOs and senior management about pain points, emerging technologies, and underwriting management systems. Here are some of our key findings.

1. Strategic Technology Investments to Combat the Soft Market – A Survey of Commercial Insurance Executives (Conducted by The Ward Group)

Meeting the technology expectations of agents and employees matters, and it is often overlooked. Beyond the profit and loss improvements that technology investments are expected to deliver, agents and employees, especially younger professionals, increasingly expect technology to be easy to use, friendly, and cutting-edge.

In this survey conducted by The Ward Group, commercial carrier CEOs were asked ten questions about technology implementation, how technology helps them compete against other insurance companies, and the use of technology for underwriting activities.

The findings clearly show that technology is recognized as a powerful competitive weapon. Eighty-five percent of executives polled indicated that technology can play a “significant role” or a “more than average role” in their companies’ ability to compete against other carriers.

Additional benefits that these executives expected from technology investments and a modernized underwriting system were:

- Improved underwriting productivity and reduced underwriting expense

- Reduced loss ratio

- Ease of doing business

- Better individual risk selection and pricing

- Better understanding of the entire book of business

- Streamlined processes and reduction of expenses

- Meeting expectations of agents and employees

The survey participants also provided, in their own words, what they believe are the most important ways to implement new systems or to invest in new technologies that will help in a soft market:

- “Technology is key to accomplishing underwriting and processing more efficiently….”

- “Make it quick and easy for the agent to do business and they are more apt to use your products in a soft market.”

- “If agents have to rekey to do business with us, they will place the business elsewhere.”

- “Automating underwriting rules will speed up policy processing and shorten turnaround time.”

- “Quickly understand at what price level a risk can be written and still make a profit.”

- “New systems and technologies…allow underwriters more time to review the risk and make more qualified underwriting decisions.”

- “Improved efficiencies give underwriters the opportunity to review more submissions.”

- “Technology can differentiate a company from competitors.”

2. Mid-Tier Carrier CEO Study (Conducted by Phelon Group)

When surveyed about pain points, the predominant concern for mid-market P&C carriers (48%) is how to get profitable business on the books in the softening market. Executives recognize that their current underwriting processes are grossly inefficient, partly because those processes are built around outdated legacy systems. However, they believe that their intellectual capital lies in their existing systems and analytics, and they are unwilling to walk away from that competitive advantage. Carriers are looking for ways to leverage this asset and to further codify their knowledge to get profitable business on the books.

Regarding underwriting challenges, executives chose the following priorities:

- Improving ease of doing business with agents (33%)

- Automation of underwriting (30%)

- Straight-through processing (30%)

- Integration with predictive analytics systems

- Management visibility

- Sharing of best practices

The participants shared with us some of their perspectives:

- “Getting the business on the books and pricing properly with respect to risk is my main concern. Profitability is key.”

- “Our legacy systems create huge inefficiencies and the bodies we need to process the underwriting are too heavy.”

- “It is hard to establish true and profitable pricing in a softening market…We need better tools to analyze trends and create pricing that accurately reflects the market.”

- “We looked to the market for a 3rd party solution, but we could not find one that met our customization requirements. Whatever we would choose would have to integrate with our system to leverage the investments we have already made in customizing our policies and pricing.”

- “We operate in a highly competitive market and need to make it easier to work with our agents.”

3. Magic Wand Survey (Conducted at NAMIC Commercial Lines UW Seminar)

Earlier this year, we asked senior and underwriting managers, “If you had a magic wand, what top benefits would you want from an underwriting automation system?”

- The overwhelming winner was increased efficiency and productivity. It received the most votes overall and the most first-place votes.

- Tied for second place were speed & agility and ease of use (for underwriters). Managers are looking for user-friendly, intuitive systems that will make it easy to do their jobs without adding complexity or requiring extensive training. At the same time, they want agility: the ability to change their rules, data, and processes quickly in response to changing market conditions.

- The fourth most popular response was ease of doing business with agents.

These four responses all address the need for better workflow and systems in the underwriting process. Respondents also shared some insightful comments:

- “We want increased premium capacity with the same number of staff, for profitable growth.”

- “I want to reduce the number of people handling a submission and cut down on the back-and-forth questions between underwriters and agents.”

- “We want to improve the customer experience.”

- “The ideal system would allow our customer – the agents – to interact with our associates and view the system together. This would allow us to provide better service.”

The remaining responses included integration of disparate systems into a unified underwriting desktop, management visibility, discipline & consistency, scaling the business, and predictive modeling & analytics. (Interestingly, every one of these items received some first- and second-place votes.)

How much we’ve spent, how little we’ve changed!

A few months ago I was involved in a discussion about the challenges of tracking submission activity and turnaround times. It reminded me of how little we have accomplished over the last 25 years. The question was what to use as the received date/time for a submission: when it arrived in the mailroom/imaging station, when the underwriting assistant got it, or when the underwriter got it. I realized that I had had that same discussion with my business users 25 years ago. Although individual steps have been automated, we still have basically the same processes, the same steps, the same people!

With today’s capabilities, a submission could be received through upload or agent portal entry (including supplemental data and attachments). Any necessary web services (e.g., address scrubbing, geo-coding, financials) could already have been run in accordance with carrier rules and their results attached to the account. The submission could immediately appear on the assistant’s or underwriter’s work queue. From that point, submission tracking could be done auto-magically by the system and be available in real time through a dashboard.

But that is not where most carriers are today. Generally, we have automated various individual steps, but the overall workflow is still a manually-controlled one, performed by the mailroom, imaging, clerical, and underwriting staff.

For example, we’ve spent millions of dollars to go paperless, but in many companies underwriters are still pulling up electronic images and re-typing data into another system, just as we used to do with mailed-in or faxed-in paper. This is wonderful document management and forwarding, but it is still the same old workflow. In fact, underwriting team members may be re-typing information into their rating engine and/or quoting system. They are probably re-typing into multiple web services like D&B, Choicepoint, geo-coding, engineering survey, loss control vendors, etc. And maybe they are still typing to get loss history, customer IDs, submission file labels, and who knows what else. (Take a little test: How many times does your staff enter, type, or write the insured name, whether in a system, on a letter or form, on a label, or in a web service? Once, twice, four times, seven times, ten times, more?)

How many of us still pass paper from one person to the other – underwriter to assistant, rater, or referral underwriter? How many of us still take hours or days to acknowledge receipt of a submission, to collect the supplemental data needed to underwrite it, to generate a quote, to get the agent’s feedback? How long does it normally take to resolve a 30-second issue between the underwriter and the agent or the underwriter and his/her supervisor? How long does it take to send, receive, research, make a decision, and reply to a referral? On the other hand, how long would it take if all the information were presented to the underwriter and manager in context, a click or two away, and the transmission was instant?

And that’s just what we do to ourselves. How does an agent feel about how we help him/her provide service to their customer?

Our business processes are constrained by our old systems and our old patterns. Our systems treat underwriting as a data entry process for policy administration instead of a unique workflow with its own set of players, sources of information, processes, and rules. And our ideas tend to be limited to this view of what is possible.

We need to break free of this mindset – to be able to see what is possible. Let’s start with a list of workflow “don’ts”, things that underwriters and agents shouldn’t have to do or use anymore:

- Tracking sheets

- Typing in data from a paper or from one system to the other

- Waiting for a paper file to be pulled or received

- Having to close one submission in the system to be able to access another

- Re-typing data into another system to get a loss control, loss history, credit report, MVR, VIN validation, etc.

- Searching through the underwriting manual or old emails to find that company directive on writing xxx LOB in yyy territory

- Agent entering 5 screens of data only to find out you don’t write that class at that size in that state

- Waiting two hours for an agent/underwriter to get back in the office to check their files on something

- Losing the account because someone misplaced the submission paperwork

- Finding out six months later that an agent/underwriter shouldn’t have quoted that account because it was outside your appetite or their authority level

- Collecting information, printouts, separate documents, and the underwriter’s notes to pass it on to the referral underwriter

- Reconciling your agent portal quote with your backend rating quote

Now let’s review what types of emerging technologies are ready for prime-time and then think about how we can do things better.

Emerging technologies

Over the last several years, there have been many exciting advances in technology and in what it can do for business operations. Most of these are still on the wish list for carriers and, for that matter, for insurance systems vendors. But these emerging technologies provide a new foundation for breaking free of the older system and technology constraints that have kept us stuck in our old workflows.

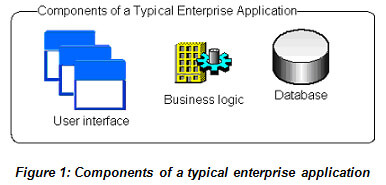

Service-Oriented Architecture

One of the most basic innovations is Service-Oriented Architecture (SOA). SOA breaks application systems into separate “services” that can receive input parameters, run, and return their result set to whoever invoked them. Each service acts like a building block that can be used and re-used in various contexts, like a Lego block. This allows applications to be assembled from appropriate services: You’d like to check the customer’s financial status? Just plug in a Dun & Bradstreet report. SOA provides a more flexible and more sustainable way to set up your enterprise applications.

Note that retrofitting existing applications into a service-oriented architecture can be challenging. Some major subsystems (e.g., rating, policy issuance) can sometimes be broken out as services so that the legacy system can work alongside newer SOA applications, but a full rework of legacy applications is rarely practical.

However, SOA is clearly the best practice now and all new applications, whether built in-house or acquired from solution providers, should be service-oriented architecture solutions.
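To make the building-block idea concrete, here is a minimal Python sketch under loose assumptions: each service is modeled as a plain function that takes input parameters and returns a result set, and the application assembles whichever services it needs. The service names (`scrub_address`, `financial_report`) and the `Account` structure are hypothetical stand-ins, not a real vendor API; a production SOA would expose services over a network (for example, as web services) rather than as in-process functions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# A service receives input parameters and returns a result set.
Service = Callable[[Dict[str, str]], Dict[str, str]]


def scrub_address(params: Dict[str, str]) -> Dict[str, str]:
    # Hypothetical stand-in: collapses whitespace and upper-cases the address.
    # A real scrubbing service would validate against postal reference data.
    return {"address": " ".join(params["address"].split()).upper()}


def financial_report(params: Dict[str, str]) -> Dict[str, str]:
    # Hypothetical stand-in for a third-party financial-status lookup;
    # it only records who the report was requested for.
    return {"requested_for": params["insured_name"]}


@dataclass
class Account:
    insured_name: str
    address: str
    reports: Dict[str, Dict[str, str]] = field(default_factory=dict)


def run_services(account: Account, services: Dict[str, Service]) -> Account:
    """Invoke each plugged-in service and attach its result set to the account."""
    params = {"insured_name": account.insured_name, "address": account.address}
    for name, service in services.items():
        account.reports[name] = service(params)
    return account


# Assemble the application from building blocks: one dictionary entry per service.
account = run_services(
    Account("Acme Widgets", "12  main st"),
    {"address": scrub_address, "financials": financial_report},
)
print(account.reports["address"])  # {'address': '12 MAIN ST'}
```

Adding a geo-coding or loss-history service would mean writing one more function with the same shape and adding one entry to the dictionary; `run_services` itself would not change.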

Web 2.0 & rich internet applications

Web 2.0 or Rich Internet Applications are generic terms that refer to the use of technologies and methods to bring new levels of interactivity and real-time behavior into browser-based applications. Examples are the use of blogs, wikis, chat, social-networking, photo/video, and voice.

What’s new is not so much the technical capabilities themselves, but the new forms of mass use that have sprung up as internet access has expanded past critical mass. People have been sharing files and chatting over the internet for decades. But now it is so common and standard that internet applications are being built around these capabilities, with documents and streaming video and chatting as a part of the application interaction. Witness Facebook, dating services and even the NBC Olympics website.

Similarly, Web 2.0 offers new options for how we do business in the insurance space. We can incorporate chat, real-time notes, flexible file/video attachments wherever they can improve the quality and/or speed of the process.

Configurability

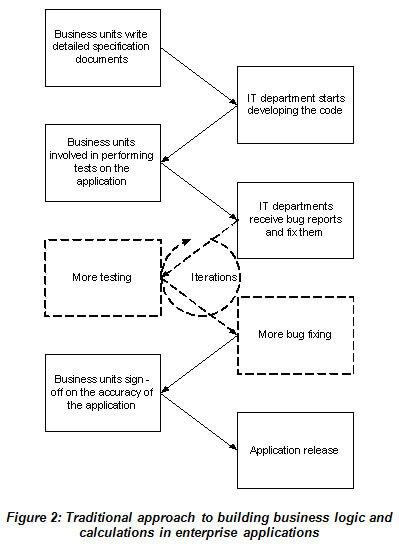

Configurability refers to the ability to specify or change details of a system without having to touch the underlying base code of the system. The concept is not new: vendors have talked about being configurable for a couple of decades. But both the breadth and the ease of configuration have improved dramatically in the last several years.

In the past, configurability usually referred to the ability to redefine the values of a few fields to fit a carrier’s specific data requirements, or a control table that would direct processing between a few pre-defined paths. But now you have the ability to truly define or redefine any and all the data elements, values, supplemental data, screens and screenflow, edits, risk selection and appetite rules, underwriting guidelines and best practices, straight-thru processing, assignments, referrals, users, permissions, letters of authority, and the internal and external services you want to perform. Before, you could tweak your hard-coded process with a few variations. Now you can configure virtually your whole process for each line of business, geography, distribution channel, and even each individual.

Configuration has gotten more powerful and much easier. In the past, the “configuration” was done by the vendor’s programmers, either in native programming code or through a proprietary pseudo-code. Today, the advanced solutions in the marketplace offer point-and-click configuration tools that allow business system analysts or developers to specify what should happen.

Configurability is another best practice that carriers should insist on as they look at new solutions. (But make sure you get to see and try it – everyone says they are configurable, but what they actually offer varies widely.)

Rules & workflow engines

Rules and workflow engines allow the definition of specific business rules and/or process workflows separate from the system’s data and screen handling.

This segregation of the rules and/or process steps allows for easier modification of the rules and/or process without having to change the underlying base code. For instance, if the carrier decides to tighten their underwriting rules, change their assignment rules, or tweak their scheduled credit ranges for a specific territory and class, the change may be made to the appropriate rule or workflow, and the application will automatically absorb that change every place the application uses that rule or workflow.
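A minimal sketch of that separation, assuming a simple table-driven rule rather than any particular commercial rules engine: the allowed schedule credit/debit range per territory and class lives in a data table, and the application only asks whether a proposed modifier is allowed. The territory codes, class codes, and ranges are hypothetical.

```python
from typing import Dict, Tuple

Range = Tuple[float, float]

# Hypothetical rule table: allowed schedule credit/debit range per
# (territory, class). It is data, not code: tightening a range means
# editing the table, and every caller picks up the change.
SCHEDULE_RANGES: Dict[Tuple[str, str], Range] = {
    ("T1", "CLASS-A"): (-0.25, 0.25),
    ("T2", "CLASS-A"): (-0.15, 0.15),
}
DEFAULT_RANGE: Range = (-0.10, 0.10)


def modifier_allowed(territory: str, class_code: str, modifier: float,
                     rules: Dict[Tuple[str, str], Range] = SCHEDULE_RANGES) -> bool:
    """Return True if the proposed schedule modifier is within the configured range."""
    low, high = rules.get((territory, class_code), DEFAULT_RANGE)
    return low <= modifier <= high


print(modifier_allowed("T1", "CLASS-A", 0.20))  # True

# The carrier tightens territory T1; no application code changes.
SCHEDULE_RANGES[("T1", "CLASS-A")] = (-0.10, 0.10)
print(modifier_allowed("T1", "CLASS-A", 0.20))  # False
```

In a real rules engine the table would be maintained through the engine's own management tools rather than a Python dictionary, but the principle is the same: the rule changes, the calling code does not.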

In addition, these engines permit separate and more effective management and facilitate re-use of the business rules and workflows across the carrier’s entire business operation. (Rules engines and workflow engines are distinct from each other, although they overlap in some installations; they are similar in how they relate to the business application.)

Separate external rules and workflow engines have been available for many years. But, in reality, their effectiveness in insurance applications has often been limited. Traditionally they have been toolkits with little or no applicable insurance content out-of-the-box. As a result, you would have to build a new application from scratch, or you would have to integrate the external rules/workflow into your existing legacy systems. Either approach involves significant cost and time. In addition, often you would find that you can’t efficiently invoke the rule/workflow engine everywhere you would like without prohibitive performance overhead (e.g., invoking a rules engine at the field level).

In recent years, however, modern configurable solutions are increasingly emerging with embedded rules and workflow capabilities. These products offer the necessary level of rule and workflow management while also providing standard insurance rules and workflow out-of-the-box, allowing configuration of company-specific rules and practices, and performing efficiently at any level in the application (e.g., pre-screening, field-level, screen-level, assignment, quote, referral, etc.). This can enable the carrier to implement a modern solution with configurable, embedded rules and workflow in a much more reasonable time and cost.

Underwriting 2.0 – the platform of the future

Okay, so that’s the technology with all of its marketing glory. But let’s be real. What can these emerging technologies do for our process? Can they bring all our islands of automation into a coherent, efficient underwriting process? How will they really improve productivity and quality for the underwriter and the agent? What can the modern process be like?

Above we reviewed some of the “don’ts” that have plagued our workflows for the last few decades. Let’s start looking forward and defining some “do’s” as principles for our future underwriting process.

- Everything you need to see in one place (not in different systems, email, the fax room, your in-basket, the document management system, etc.).

- Everything in 1-3 clicks – everything! (account, submission/policy header, application, correspondence, attached files, web service reports, external system data (loss history, loss control, payment history), underwriting worksheets, rating worksheets, predictive analytics model results, rating, rating factors, quotes, …)

- Everything is accessed and updated real-time.

- Everyone is notified of everything relevant, immediately.

- Underwriters and/or agents can work with each other, not at each other, in one process (notes, chat, shared view/update, instant update and communication).

- Multiple accounts open at once (a click away).

- Straight-thru processing for the clear winners and losers and, for the rest, everything set up in one desktop for the underwriter.

- Automatic advice and reminders for the underwriter based on account characteristics or activity.

- Intuitive, easy-to-learn, easy-to-use (insurance terminology, no Save buttons, even a configurator designed for real insurance people and processes).

- No re-entry – ever.

- Configurability to keep the system current with the business needs and opportunities.

These are all possible today. The technologies are available now, and people are using them to do exactly these kinds of things (though not always in insurance). And they don’t require tens of millions of dollars and years of waiting. The first step is realizing that this is the business process you want.

What you need is a single platform, an integrated desktop, a control station for the underwriter and the agent that has all the necessary steps and resources right there. Tasks that don’t require human intervention happen automatically ahead of time. Tasks that require professional judgment or decision are automatically queued up for the underwriter and agent – with all the appropriate research, background information, and pre-analysis needed to make the best possible decision available at the click of a mouse. This new desktop and process is integrated with and leverages the carrier’s existing systems, data, rating, forms, models, and knowledge resources. Communication and collaboration with others is instantaneous and part of the account record. The platform and the process are intuitive for underwriters and agents. And all aspects of the desktop and the process can be adjusted, added to, or redirected as fast as the market changes.

Let’s now explore in more detail how this type of platform works and what it delivers.

Agent productivity & ease of doing business

Underwriting 2.0: The agent can upload a submission from their agency management system or can easily enter a submission from scratch. The entry process and screens are intuitive so agent training and errors are minimal. The agent desktop provides quick pre-qualification and risk appetite feedback so the agent doesn’t waste time submitting risks that the carrier is not interested in. Supplemental data is prompted for at entry while the submission is still in front of the agent. Electronic documents, photographs, loss runs, and notes can quickly be added to the submission as a part of entry. When the agent submits the risk, it goes directly to the appropriate assistant’s or underwriter’s desktop and the receipt is confirmed to the agent instantaneously. The entire process of submitting a risk, including supplemental data and attachments, only takes 5 – 15 minutes from the agent’s desktop to the underwriter’s desktop.
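The pre-qualification step can be sketched as an appetite lookup that runs before the agent fills in detailed screens. This is a minimal illustration, assuming appetite is expressed as state, class, and maximum account size (using annual revenue as a hypothetical size measure); the class name and limits are invented for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class AppetiteRule:
    state: str
    class_code: str
    max_annual_revenue: float  # largest account size written for this class/state


@dataclass(frozen=True)
class PreQualification:
    state: str
    class_code: str
    annual_revenue: float


def prequalify(risk: PreQualification, appetite: List[AppetiteRule]) -> Optional[str]:
    """Return None if the risk is in appetite, otherwise a short reason for the agent."""
    for rule in appetite:
        if (rule.state, rule.class_code) == (risk.state, risk.class_code):
            if risk.annual_revenue <= rule.max_annual_revenue:
                return None
            return (f"Class {risk.class_code} in {risk.state} is written only up to "
                    f"{rule.max_annual_revenue:,.0f} in revenue")
    return f"Class {risk.class_code} is not written in {risk.state}"


# Hypothetical appetite: restaurants in Ohio up to 5 million in revenue.
appetite = [AppetiteRule("OH", "RESTAURANT", 5_000_000)]
print(prequalify(PreQualification("OH", "RESTAURANT", 8_000_000), appetite))
```

Because the check needs only three fields, it can run on the first screen, so the agent learns the risk is out of appetite before entering anything else.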

Quotes (including multiple quotes and quote options), agent responses, re-quotes, bind requests, and binders are prepared and delivered in real-time. Now the agent and underwriter can work through a rush quote much more efficiently and accurately, collaborating and communicating together on the same system.

For example, the new platform utilizes immediate alerts and notifications, notes, live chat (like Instant Messenger), email correspondence, shared viewing and update of the account. This enables the agent and underwriter to resolve questions and move the account along as fast as possible without time-wasting email, fax, and voicemail delays and constant account pick-up/put-downs and handoffs.

The Result: The agent wants to bring business to you because he/she can get a confirmation, quote, and binder from you faster and more efficiently than with any other carrier. Both sides benefit and help each other succeed.

Underwriter productivity

Underwriting 2.0: The system automatically prepares the risk for the underwriter’s consideration. Using its service-oriented architecture, the platform can pre-assemble carrier system data (e.g., loss history, loss control, payment history), web service data (e.g., MVR, Xmod, financial, geo-code), and predictive analytics results, or it can let the underwriter select the information appropriate for this risk. In addition, the desktop analyzes the submission to highlight risk conditions or characteristics for the underwriter’s attention, or to require a referral, based on the carrier’s underwriting best practices, knowledge base, and the underwriter’s letter of authority. Given all of this information about the account, the desktop uses its embedded rules capability to advise the underwriter or automatically drive the appropriate processing for the risk. The platform can route clear winners and losers through straight-through processing before the underwriter has spent any time on them, present other risks to the underwriter with best-practice advice, or flag an account for mandatory referral.
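The routing described above might look roughly like the following sketch. Everything here is an assumption for illustration: `risk_score` stands in for a predictive model result on a 0 (best) to 1 (worst) scale, the thresholds are invented, and the letter of authority is reduced to a single premium limit, whereas a real letter of authority may cover more than premium.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_QUOTE = "straight-through quote"
    AUTO_DECLINE = "straight-through decline"
    REFERRAL = "refer to senior underwriter"
    UNDERWRITER = "underwriter desktop"


@dataclass
class Submission:
    premium: float
    risk_score: float  # hypothetical model score: 0 (best) to 1 (worst)
    in_appetite: bool


@dataclass
class Authority:
    max_premium: float  # simplified letter of authority


# Hypothetical thresholds; a carrier would configure these.
AUTO_QUOTE_MAX_SCORE = 0.2
AUTO_DECLINE_MIN_SCORE = 0.9
AUTO_QUOTE_MAX_PREMIUM = 10_000


def route(sub: Submission, authority: Authority) -> Route:
    """Send clear losers and winners straight through; refer or queue the rest."""
    if not sub.in_appetite or sub.risk_score >= AUTO_DECLINE_MIN_SCORE:
        return Route.AUTO_DECLINE
    if sub.premium > authority.max_premium:
        return Route.REFERRAL
    if sub.risk_score <= AUTO_QUOTE_MAX_SCORE and sub.premium <= AUTO_QUOTE_MAX_PREMIUM:
        return Route.AUTO_QUOTE
    return Route.UNDERWRITER


print(route(Submission(5_000, 0.1, True), Authority(50_000)).value)
```

Checking the decline conditions first, and authority before the auto-quote test, keeps an out-of-appetite or over-authority risk from slipping through straight-through processing.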

These features allow the underwriter to spend more of his/her time underwriting, concentrating on the risk characteristics and the appropriate price. Everything the underwriter needs is on the desktop, just clicks away – the complete application, attachments, notes and chat, external web reports, underwriting guidelines and best practice checklists, rating and pricing, quote and bind capabilities, issuance, endorsements, cancellations, renewals, and dashboard visibility.

For example, the underwriter prepares any worksheet items that have not been prefilled and generates one or more quote options and proposals in real-time. If a referral is required, the full account and all of the backup information can instantly be placed in the referral underwriter’s queue for their review and decision.

Once the quote is released to the agent, an alert pops up on the agent’s desktop and an email is sent to the agent with the quote attached to notify them immediately. The agent and underwriter can now collaborate through chat or notes and can share views and updates of the risk. This helps the underwriter to instantly respond and modify the quote if appropriate, lets the agent accept the right quote, and lets the underwriter close the business in real-time.

Finally, when the underwriting process is completed, all the policy information and documents are passed to the carrier’s existing systems of record so the existing processes and systems are not disrupted. Throughout the entire underwriting process, all information and actions are saved in a detailed audit trail for reference by the underwriter, the referral underwriter, a claims adjuster, loss control, billing, and auditors.

The Result: The underwriter spends more time underwriting, handles more quotes, and writes more business. Setup activity is automatic, incorporation of web data and carrier knowledge happens in real time, communication is instantaneous, and the agent gets a response as quickly as possible. Ultimately, agents bring you more business because you get them an answer first.

Underwriting quality/discipline

Underwriting 2.0: The underwriting desktop needs to enforce quality as well as productivity. Quality underwriting is the key to an insurance carrier’s profitability. This platform will use its embedded rules engine and the external data from web services and the carrier’s systems to guide and enforce best practices throughout the underwriting process. Every step of the process is assisted by contextual business rules that advise the underwriter and/or drive the process – the initial screening of the risk, the analysis of the risk characteristics, the knowledge-based reminders, assigning appropriate tiering/rating/pricing factors, checking electronic letters of authority, and automatic referral flags.

The Result: Quality is built right into the process. Underwriters are advised and directed in accordance with the carrier’s guidelines and best practices every step of the way. Rather than relying on the underwriter to find and use paper- or email-based directives and after-the-fact audits, or forcing all risks through a referral process to ensure senior underwriters’ review, the desktop will lead every underwriter through the carrier’s approved risk analysis and pricing regimen. The carrier’s book will be accurate, consistent, and auditable.

Incorporating predictive analytics

Underwriting 2.0: Predictive analytics brings sophisticated analysis into the underwriting process, but only if it is used. Rather than modeling being a separate activity that involves additional work, the new platform will incorporate predictive analytics. The underwriting desktop can then directly apply model results to screen risks out, qualify them for straight-through processing, alert the underwriter to the key risk characteristics, pre-fill rating and pricing factors, and/or mandate referral processing. Having the best information and analysis available lets your underwriters assign the best price – aggressive pricing for the winning accounts, and defensive pricing for the marginal accounts.

The Result: Incorporating predictive analytics into the underwriting process helps the underwriter write better business at the best price. Precision pricing on top of informed risk selection and underwriting quality will produce the most profitable book of business.

Actionable knowledge management

Underwriting 2.0: All too often, a carrier’s underwriting knowledge and experience are locked up in senior underwriters’ heads or buried in underwriting manuals and email archives. The new platform puts this intellectual capital to work within the underwriting process. By capturing knowledge items and presenting them in the context of specific risk criteria, the platform makes them actionable: suggesting attention to specific characteristics, requiring specific action, enforcing a referral, or performing an automated function. Every underwriter receives the benefit of the carrier’s best underwriters’ guidance and best practices while underwriting an account.
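One way to picture an actionable knowledge item is as guidance paired with the risk criteria that trigger it and the action it takes. The sketch below assumes that structure; the three items and their criteria are hypothetical examples, not recommended underwriting guidelines.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Callable, Dict, List

Risk = Dict[str, Any]


class Action(Enum):
    ADVISE = "advise"    # draw attention to a characteristic
    REQUIRE = "require"  # underwriter must complete a specific task
    REFER = "refer"      # force a referral


@dataclass(frozen=True)
class KnowledgeItem:
    guidance: str
    action: Action
    applies_to: Callable[[Risk], bool]  # risk criteria that trigger the item


def triggered(risk: Risk, items: List[KnowledgeItem]) -> List[KnowledgeItem]:
    """Return the knowledge items whose criteria match this risk, in order."""
    return [item for item in items if item.applies_to(risk)]


# Hypothetical knowledge items captured from senior underwriters.
ITEMS = [
    KnowledgeItem("Order a roof inspection for roofs over 20 years old",
                  Action.REQUIRE, lambda r: r.get("roof_age", 0) > 20),
    KnowledgeItem("Check hood and duct cleaning records",
                  Action.ADVISE, lambda r: r.get("class") == "restaurant"),
    KnowledgeItem("Frame buildings over four stories need senior review",
                  Action.REFER,
                  lambda r: r.get("construction") == "frame" and r.get("stories", 0) > 4),
]

for item in triggered({"class": "restaurant", "roof_age": 25}, ITEMS):
    print(item.action.value, "-", item.guidance)
```

Keeping the action type on each item lets the desktop decide whether to show a reminder, add a required task, or route the account to referral, without a separate code path per guideline.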

The Result: Retaining the knowledge of our senior underwriters and training our junior underwriters is one of the major challenges in our industry today. Capturing and presenting underwriting knowledge through the underwriting desktop protects and leverages this most valuable asset, giving your junior underwriters the benefit of your best underwriters’ wisdom and experience where it matters most, right within the underwriting of the account. Actionable knowledge management will improve the quality of the book of business, preserve the carrier’s knowledge assets, and enable easier training of junior underwriters.

Visibility

Underwriting 2.0: In today’s insurance world, everyone needs to know how they are doing against their goals. The new platform will track everything that has been processed and display it through a real-time dashboard. Both individual underwriters and underwriting management have detailed, easy-to-read, and configurable displays of key metrics such as item and premium counts, ratios, and turnaround time. Further drill-down into those metrics is also available with a few clicks of the mouse.
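As an illustration of one dashboard metric, the sketch below computes average turnaround time from event timestamps that the platform records automatically. It assumes each submission's events are stored as a name-to-timestamp map; the event names are hypothetical, and which event counts as “received” is left as a parameter because carriers define it differently.

```python
from datetime import datetime
from statistics import mean
from typing import Dict, List

Events = Dict[str, datetime]  # event name -> timestamp captured by the platform


def average_turnaround_hours(submissions: List[Events],
                             start: str = "received",
                             end: str = "quote_released") -> float:
    """Mean hours between two tracked events, skipping submissions not yet at `end`."""
    hours = [
        (s[end] - s[start]).total_seconds() / 3600
        for s in submissions
        if start in s and end in s
    ]
    return mean(hours) if hours else 0.0


subs = [
    {"received": datetime(2024, 1, 8, 9), "quote_released": datetime(2024, 1, 8, 11)},
    {"received": datetime(2024, 1, 8, 9), "quote_released": datetime(2024, 1, 8, 13)},
    {"received": datetime(2024, 1, 8, 10)},  # still open, so excluded
]
print(average_turnaround_hours(subs))  # 3.0
```

Because the timestamps come from the system rather than from tracking sheets, the same data can be sliced by underwriter, line of business, or agent for drill-down.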

The Result: Underwriters and managers now have real-time statistics that reflect what is being processed and written, enabling them to recognize and respond to their own progress as well as market changes and opportunities.
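As a rough illustration of the figures such a dashboard rolls up, the sketch below computes per-underwriter submission counts, written premium, quote and hit ratios, and average turnaround from a list of submission records. The `Submission` fields, the status values, and the ratio definitions (quote ratio = quoted / submitted, hit ratio = bound / quoted, where a bound account counts as quoted) are assumptions chosen for illustration; carriers define these metrics in different ways.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Submission:
    underwriter: str
    received: date
    status: str                      # hypothetical statuses: "open", "quoted", "bound", "declined"
    premium: float = 0.0             # written premium, counted only when bound
    responded: Optional[date] = None  # date the underwriter first responded to the agent


def dashboard(subs):
    """Aggregate submissions into per-underwriter metrics."""
    stats = defaultdict(lambda: {"submissions": 0, "quoted": 0, "bound": 0,
                                 "premium": 0.0, "turnaround_days": []})
    for s in subs:
        m = stats[s.underwriter]
        m["submissions"] += 1
        if s.status in ("quoted", "bound"):  # a bound account was quoted first
            m["quoted"] += 1
        if s.status == "bound":
            m["bound"] += 1
            m["premium"] += s.premium
        if s.responded is not None:
            m["turnaround_days"].append((s.responded - s.received).days)

    report = {}
    for uw, m in stats.items():
        days = m["turnaround_days"]
        report[uw] = {
            "submissions": m["submissions"],
            "written_premium": m["premium"],
            "quote_ratio": m["quoted"] / m["submissions"],
            "hit_ratio": m["bound"] / m["quoted"] if m["quoted"] else 0.0,
            "avg_turnaround_days": sum(days) / len(days) if days else None,
        }
    return report
```

Drill-down then amounts to running the same aggregation over a filtered subset of records, for example one line of business, one agency, or one week.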

Configurability

Underwriting 2.0: Even while the new streamlined process is being laid out, changes are inevitable. The new platform therefore can’t be a rigid solution that requires costly and time-consuming intervention to manage such changes. It needs to be able to incorporate new information, new rules, new knowledge, and new services with ease – through simple configuration – in order to keep the underwriting process current.

A truly configurable system enables business analysts with only modest technical skills to implement changes to data, screens, edits, rules, documents, and screenflow quickly and accurately. When the market changes, the carrier’s appetite or capacity shifts, or new opportunities arise, the underwriting desktop can be reconfigured on the fly to keep pace.

The Result: The ability to respond quickly to market changes, and to direct your products and underwriting attention toward new opportunities before your competitors do, provides a clear competitive advantage.
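One way to picture "simple configuration" is a minimal sketch, assuming appetite rules are stored as data (here a JSON document) and interpreted by a small generic evaluator, so that tightening appetite means editing the document rather than releasing new code. The rule fields, operators, states, and limits below are hypothetical; a real platform would add validation, versioning, and an editing screen for analysts.

```python
import json
import operator

# Hypothetical appetite configuration an analyst might maintain.
APPETITE_CONFIG = """
{
  "rules": [
    {"field": "state", "op": "in", "value": ["MA", "NH", "RI"],
     "message": "Outside target territory"},
    {"field": "annual_revenue", "op": "<=", "value": 50000000,
     "message": "Revenue above appetite"},
    {"field": "years_in_business", "op": ">=", "value": 3,
     "message": "Insufficient operating history"}
  ]
}
"""

# The only logic that lives in code: a fixed set of comparison operators.
OPS = {
    "<=": operator.le,
    ">=": operator.ge,
    "==": operator.eq,
    "in": lambda actual, allowed: actual in allowed,
}


def load_rules(config_text):
    """Parse the analyst-maintained configuration into a list of rule dicts."""
    return json.loads(config_text)["rules"]


def check_appetite(risk, rules):
    """Return a message for every rule the risk fails; an empty list means in appetite."""
    failures = []
    for rule in rules:
        actual = risk.get(rule["field"])
        if actual is None:
            failures.append(f"Missing {rule['field']}")  # flag incomplete submissions
        elif not OPS[rule["op"]](actual, rule["value"]):
            failures.append(rule["message"])
    return failures


if __name__ == "__main__":
    risk = {"state": "NH", "annual_revenue": 20_000_000, "years_in_business": 5}
    print(check_appetite(risk, load_rules(APPETITE_CONFIG)))

    # Appetite shifts: the analyst drops NH from the territory list in the config.
    cfg = json.loads(APPETITE_CONFIG)
    cfg["rules"][0]["value"] = ["MA", "RI"]
    print(check_appetite(risk, load_rules(json.dumps(cfg))))
```

The evaluator never changes when the carrier's territory or limits do; only the configuration it reads changes.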

Modernize, optimize, transform – start now

Can you underwrite business as efficiently and effectively as you think you should be able to? Or are you constrained by your existing processes and systems?

Are your underwriters spending most of their time underwriting? Or are they chasing information and doing an hour of setup and data entry for every half-hour of true underwriting?

Do your agents consider you their carrier-of-choice because you make their job easier and help them succeed? Or do they think you are hard to do business with, so you have to constantly press them for their quality submissions?

Are you leveraging your underwriting knowledge and best practices to write the best business at the best price? Or are you just doing pretty well with what you have to work with? Do you even really know?

Modernizing and optimizing your process can transform your business.

- Because you help agents get answers to their customers more quickly and easily, more business will come in.

- Underwriters will be able to focus on underwriting and handle more submissions in less time with better quality. Yes, underwriters will be able to write more business – and better business – at the best price.

- Managers will finally be able to see across all lines of business, react in real-time, and deploy a true enterprise underwriting strategy.

These platforms are all within our reach today, but only if we are willing to transform how we process our business.

Stop looking at the underwriting process as just data entry for the policy administration system – it is a unique business process with a unique set of demands and goals.

Stop investing your energy and resources in small enhancements to the same constraining workflow – tantamount to “paving the cowpaths” – and start thinking differently about how you would process if you had that magic wand.

The best time to modernize and optimize is when it helps you lead, not when you are trying to catch up. The possibilities are here, now. And if you don’t seize them, your competition will. The first step is to define the business process you want. So get started – thinking, talking, planning, and acting.

Edward Gray is the Director of Customer Solutions for FirstBest Systems in Lexington, MA, where he works with customers to develop a shared vision for how an underwriting management system can bring real-world productivity and quality benefits to the carrier’s internal and agency operations. Ed has more than twenty-five years of insurance expertise in Information Technology and Business Operations with carriers and brokers, including roles as CIO, COO, and Senior Vice President of Operations. He has extensive hands-on experience in system and business process architecture and re-engineering in policy administration, claims, billing, reinsurance, accounting, and management reporting areas, so he has seen what does (and doesn’t) deliver real value to the insurance organization. Ed would be happy to hear your thoughts on the underwriting process.